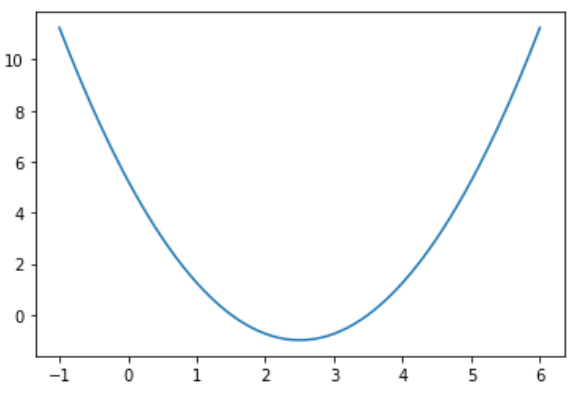

Suppose the loss function J looks like this:

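    import numpy as np
    import matplotlib.pyplot as plt

    # 141 evenly spaced sample points on [-1, 6]
    plot_x = np.linspace(-1, 6, 141)
    # A parabola with its minimum at x = 2.5, where y = -1
    plot_y = (plot_x - 2.5) ** 2 - 1

    plt.plot(plot_x, plot_y)
    plt.show()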
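Written out, the curve plotted above and the update rule that gradient descent applies to it look roughly as follows. The symbols $\theta$ (the parameter) and $\eta$ (the learning rate) are notational assumptions chosen to match the `theta` and `eta` variables used in the code.

$$
J(\theta) = (\theta - 2.5)^2 - 1, \qquad \frac{dJ}{d\theta} = 2(\theta - 2.5)
$$

$$
\theta \leftarrow \theta - \eta \, \frac{dJ}{d\theta}
$$

Setting the derivative to zero gives the minimum at $\theta = 2.5$, where $J = -1$.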

Gradient descent implementation

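    def dJ(theta):
        # Derivative of J with respect to theta
        return 2 * (theta - 2.5)

    def J(theta):
        # Loss function: the same parabola as plotted above
        return (theta - 2.5) ** 2 - 1

    eta = 0.1        # learning rate (step size)
    epsilon = 1e-8   # stop once the loss changes by less than this
    theta = 0        # starting point

    while True:
        gradient = dJ(theta)
        last_theta = theta
        # Step against the gradient
        theta = theta - eta * gradient
        if abs(J(theta) - J(last_theta)) < epsilon:
            break

    print(theta)
    print(J(theta))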

Output:

2.499891109642585

-0.99999998814289

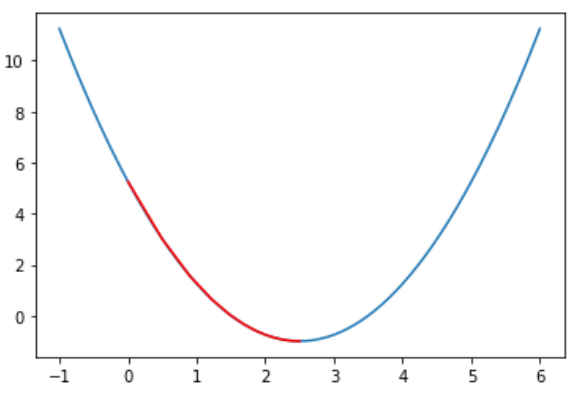
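The printed value can be cross-checked by hand. For this particular J, each update multiplies the distance to the minimum by `1 - 2 * eta`, so after `k` updates `theta` should equal `2.5 + (theta_0 - 2.5) * (1 - 2 * eta) ** k`. The sketch below wraps the same loop in a hypothetical helper `run` (not part of the original code) that also counts the updates, then compares the result with that closed form.

```python
def dJ(theta):
    return 2 * (theta - 2.5)

def J(theta):
    return (theta - 2.5) ** 2 - 1

def run(eta=0.1, theta=0.0, epsilon=1e-8):
    """Same loop as above, but also returns the number of updates (hypothetical helper)."""
    steps = 0
    while True:
        last_theta = theta
        theta = theta - eta * dJ(theta)
        steps += 1
        if abs(J(theta) - J(last_theta)) < epsilon:
            return theta, steps

theta, steps = run()
# Closed form for this quadratic: the error shrinks by (1 - 2 * eta) per update
closed_form = 2.5 + (0.0 - 2.5) * (1 - 2 * 0.1) ** steps
print(steps, theta, closed_form)
```

With `eta = 0.1` the error shrinks by a factor of 0.8 per update, which is why the loop stops slightly short of 2.5: it exits as soon as the change in J falls below `epsilon`, not when `theta` reaches the exact minimum.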

Plotting the full trajectory of theta on the curve

    def gradient_descent(initial_theta, eta, epsilon=1e-8):
        theta = initial_theta
        # Record every theta visited, starting with the initial value
        theta_history = [theta]

        while True:
            gradient = dJ(theta)
            last_theta = theta
            theta = theta - eta * gradient
            theta_history.append(theta)
            if abs(J(theta) - J(last_theta)) < epsilon:
                break

        return theta_history

    def plot_theta_history():
        # Reads the module-level theta_history assigned below
        plt.plot(plot_x, J(plot_x))
        plt.plot(np.array(theta_history), J(np.array(theta_history)), color='r')
        plt.show()

    eta = 0.01
    theta_history = gradient_descent(0., eta)
    plot_theta_history()

Output:

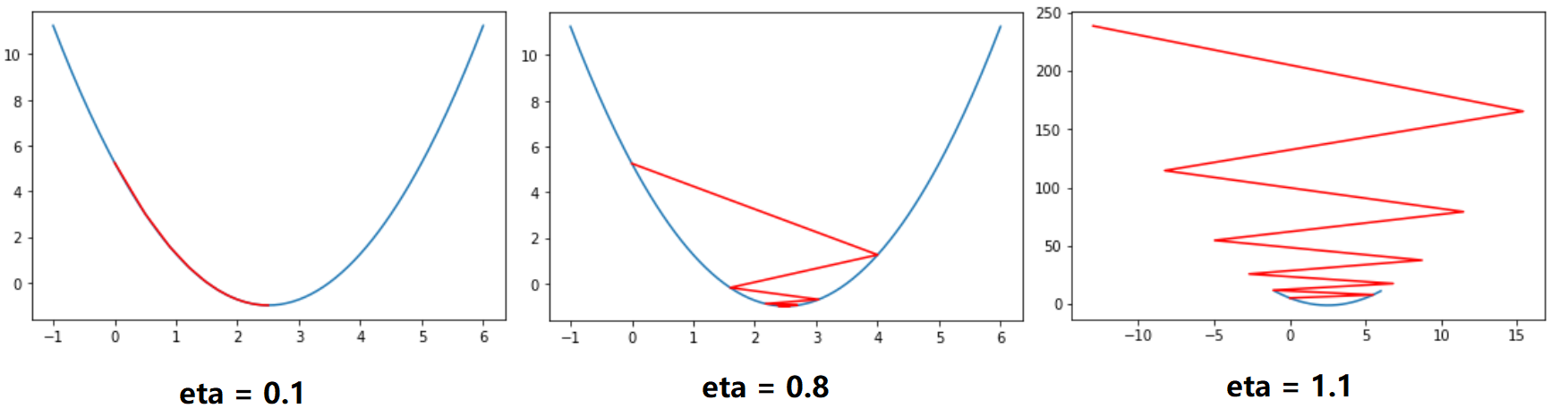
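How strongly the step size shapes this trajectory can be sketched without plotting. The example below is a minimal illustration, not the original code: `trajectory` is a hypothetical helper that takes a fixed number of steps, and the values 0.8 and 1.1 are chosen here only for contrast. For this J, each update multiplies the distance to the minimum by `1 - 2 * eta`, so the iterates converge only when that factor has magnitude below 1.

```python
def dJ(theta):
    return 2 * (theta - 2.5)

def trajectory(eta, initial_theta=0.0, n_iters=10):
    """Take exactly n_iters gradient steps and return every theta visited."""
    theta = initial_theta
    history = [theta]
    for _ in range(n_iters):
        theta = theta - eta * dJ(theta)
        history.append(theta)
    return history

for eta in (0.01, 0.1, 0.8, 1.1):
    history = trajectory(eta)
    distance = abs(history[-1] - 2.5)  # remaining distance to the minimum at 2.5
    print(f"eta={eta}: factor={1 - 2 * eta:+.2f}, distance after 10 steps={distance:.4g}")
```

A small `eta` such as 0.01 approaches the minimum slowly. A value such as 0.8 overshoots and oscillates around 2.5 while still converging. A value above 1, such as 1.1, moves further away with every step.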

Plots for different values of eta

    def gradient_descent(initial_theta, eta, n_iters=10, epsilon=1e-8):
        theta = initial_theta
        theta_history = [theta]
        i_iter = 0

        # Cap the number of updates so that a poorly chosen eta cannot loop forever
        while i_iter < n_iters:
            gradient = dJ(theta)
            last_theta = theta
            theta = theta - eta * gradient
            theta_history.append(theta)
            print(theta)
            if abs(J(theta) - J(last_theta)) < epsilon:
                break
            i_iter += 1

        return theta_history

Output: